Shared Task


News

24/05/2012: The data are available for download.

09/05/2012: The results and the list of accepted papers have been added.

03/04/2012: Updated information about the system description paper. New deadline: 16 April 2012.

03/04/2012: The final version of the evaluation script and the results have been sent to participants.

16/03/2012: The test dataset has been distributed to participants.

15/03/2012: A new version of the evaluation script for the scope detection task has been distributed to participants.

13/03/2012: A new version of the evaluation script for the scope detection task has been distributed to participants.

09/03/2012: A new version of the CD-SCO dataset has been distributed to participants.

29/02/2012: A new version of the CD-SCO dataset has been distributed to participants.

22/02/2012: A new version of the CD-SCO dataset has been distributed to participants.

19/02/2012: The schedule has been changed. Check Important Dates.

17/02/2012: The evaluation script for the scope detection task has been released.

12/02/2012: The evaluation script for the focus detection task has been released.

07/02/2012: Registration is still possible.

07/02/2012: The training and development datasets have been released.

20/01/2012: During the competition, LDC will provide an evaluation license that allows participants to obtain, free of charge, the tokens corresponding to the PB-FOC dataset. All necessary annotations will therefore be available to participants.

16/01/2012: First call for participation.

Scope and focus of negation

Negation is a pervasive and intricate linguistic phenomenon present in all languages (Horn 1989). Despite this, computational semanticists mostly ignore it. Current proposals for representing the meaning of text either dismiss negation or treat it only superficially. This shared task tackles two key steps towards obtaining the meaning of negated statements: scope detection and focus detection. Regardless of the semantic representation one favours (predicate calculus, logic forms, binary semantic relations, etc.), these tasks are the basic building blocks for processing the meaning of negated statements. The scope of negation is the part of the meaning that is negated. The focus is the part of the scope that is most prominently negated (Huddleston and Pullum 2002). In example (1), the scope is enclosed in square brackets and the focus is underlined:

Scope marks all negated concepts. The statement in (1) is strictly true if any of the following holds:

- no saying event took place;
- John was not the one who said;
- as much is not the quantity said;
- before is not the time.

Focus indicates the intended negated concepts and makes it possible to reveal implicit positive meaning. The implicit positive meaning of (1) is that John had said less before. This shared task aims at detecting the scope and focus of negation.

Tasks

Two tasks and a pilot task are proposed: Task 1, scope detection; Task 2, focus detection; and a pilot task combining scope and focus detection (cancelled).

Tracks

All tasks will have a closed and an open track.

Datasets

Two datasets are provided: CD-SCO for Task 1 and PB-FOC for Task 2.

Data format

Following previous shared tasks, all annotations will be provided in the CoNLL-2005 Shared Task format. Very briefly:

- each line corresponds to a token;
- each annotation (chunks, named entities, etc.) is provided in its own column;
- an empty line marks the end of a sentence.

A sample of the data will be provided very soon.

Evaluation

Evaluation will be performed as follows:

Task 1: Scope detection

Task 2: Focus detection

Pilot: Scope and focus detection (cancelled)
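The Data format description above can be read with a few lines of Python. It specifies one token per line, one annotation per column, and empty lines between sentences. The following is a minimal sketch, not the organisers' tooling. It assumes that columns are separated by whitespace. The function name `read_conll` and the sample rows are hypothetical, and the sketch does not interpret the task-specific columns of CD-SCO or PB-FOC.

```python
from typing import Iterable, List

Token = List[str]        # one token line split into its annotation columns
Sentence = List[Token]   # consecutive token lines up to an empty line


def read_conll(lines: Iterable[str]) -> List[Sentence]:
    """Group CoNLL-style lines into sentences (hypothetical helper).

    Assumes columns are whitespace-separated; the column inventory of the
    shared-task datasets is not modelled here.
    """
    sentences: List[Sentence] = []
    current: Sentence = []
    for line in lines:
        if not line.strip():
            # An empty line marks the end of a sentence.
            if current:
                sentences.append(current)
                current = []
            continue
        current.append(line.split())
    # Keep the last sentence even if the file has no trailing empty line.
    if current:
        sentences.append(current)
    return sentences


if __name__ == "__main__":
    # Hypothetical two-sentence sample; the columns are illustrative only.
    sample = ["John NNP *", "left VBD *", "", "Bye UH *"]
    for sentence in read_conll(sample):
        print([token[0] for token in sentence])
```

Grouping on empty lines keeps every column as a plain string. Task-specific decoding of the scope or focus columns can then be layered on top without changing the reader.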

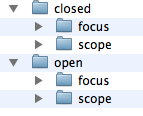

The evaluation scripts will be provided with the training datasets.

Submissions

Participants may take part in any combination of tasks and may submit a maximum of two runs per track. To submit, send an e-mail to the organisers with a zip or tar.gz file attached. The compressed file should be named according to the following convention: <SurnameName_of_registered_participant>-semst-submission-<run_number><test set name>.<extension>. The files should be organised in a directory structure as shown in the image. The test set names for Task 1 are circle and cardboard. The test set name for Task 2 is pb.
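The naming convention can be checked mechanically before a submission is sent. The sketch below makes two assumptions: the placeholders are concatenated exactly as written, with no separator between the run number and the test set name, and runs are numbered 1 and 2. The helper name `submission_name` and the participant string `SmithJohn` are hypothetical.

```python
# Test set names and archive formats as listed in the submission instructions.
TEST_SETS = {"circle", "cardboard", "pb"}  # Task 1: circle, cardboard; Task 2: pb
EXTENSIONS = {"zip", "tar.gz"}
MAX_RUNS_PER_TRACK = 2


def submission_name(participant: str, run_number: int, test_set: str, extension: str) -> str:
    """Build an archive name following the naming convention (hypothetical helper).

    Assumes runs are numbered from 1 and that <run_number> and
    <test set name> are joined with no separator, as written.
    """
    if test_set not in TEST_SETS:
        raise ValueError(f"unknown test set: {test_set!r}")
    if extension not in EXTENSIONS:
        raise ValueError(f"unsupported archive format: {extension!r}")
    if not 1 <= run_number <= MAX_RUNS_PER_TRACK:
        raise ValueError(f"run number must be between 1 and {MAX_RUNS_PER_TRACK}")
    return f"{participant}-semst-submission-{run_number}{test_set}.{extension}"


if __name__ == "__main__":
    # "SmithJohn" stands in for <SurnameName_of_registered_participant>.
    print(submission_name("SmithJohn", 1, "circle", "zip"))
    # → SmithJohn-semst-submission-1circle.zip
```

Validating against the listed test sets and archive formats catches typos in a name before the e-mail is sent. It does not check the internal directory layout.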

Submissions can be sent by e-mail to the organisers until 27 March 2012 (24:00, UTC-11:00).

Submission of papers

Participants are invited to submit a paper describing their system. The paper should include the system description and an evaluation of the system's performance, including an error analysis. Papers should describe the methods used in sufficient detail to make the work reproducible. System description papers must be submitted no later than 16 April 2012 (24:00, GMT-7). The only accepted format for submitted papers is PDF. Papers should be submitted using the START system (https://www.softconf.com/naaclhlt2012/STARSEM2012/). Under "Submission Categories", choose "Shared Task" from the "Papers" pull-down menu. Papers should follow the NAACL 2012 guidelines (http://www.naaclhlt2012.org/conference/conference.php). The one exception is that paper reviewing will *not* be blind, so you can include authors' names, affiliations, and references in the submitted paper. Additionally, the following restrictions apply to system description papers:

References and related work

Organisation - contact

Roser Morante, CLiPS-Computational Linguistics, University of Antwerp, Belgium.

Eduardo Blanco, Lymba Corporation, USA.

You can contact us by sending an e-mail to the addresses below. Please indicate [*sem-st] in the subject line.
